Saturday, August 15, 2015

Database Lifecycle Management - Dacpacs

https://www.simple-talk.com/sql/database-delivery/microsoft-and-database-lifecycle-management-dlm-the-dacpac/

The PowerShell scripts are well worth checking out, and there are several interesting calls to SQLPackage along the way. I appreciated his findings on some of the issues, such as changes that should have been detected but were not. I suspect that a setting isn't configured properly there, but I couldn't say for sure. I do know that I haven't hit issues of the sort described at the end of the article when using SQLPackage and dacpacs for normal DB development.

The conclusion is spot on: whatever solution you use, check your scripts, test, and verify before putting the solution into production.

Monday, July 20, 2015

Pre-deploy SQL Scripts before checking for changes

Here's the main article.

The general idea is that you add a SQL Script to your project with:

Build Action = "None"

Copy to Output Directory = "Copy always" (or "Copy if newer")

When you set up your publish actions, look for this script first, run it in PowerShell, SQLCMD, or whatever other option you might have, then run your SQLPackage commands as normal. It will run through whatever scripts you want to run first, then compare against the modified database to bring it up to date.
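The ordering described above can be sketched as a small wrapper. This is only an illustration in Python, not the approach from the linked article: the server, database, and file names are hypothetical, it assumes `sqlcmd` and `sqlpackage` are on the path, and it assumes Windows authentication against a database that already exists.

```python
import subprocess
from typing import Callable, List


def build_steps(server: str, database: str, pre_script: str,
                dacpac: str, profile: str) -> List[List[str]]:
    """Return the two commands in the order they must run."""
    run_pre_script = [
        "sqlcmd", "-S", server, "-d", database,
        "-E",            # Windows authentication
        "-b",            # exit with an error code if the script fails
        "-i", pre_script,
    ]
    # Same short-form arguments used elsewhere in these posts.
    publish = ["sqlpackage", "/a:Publish", f"/sf:{dacpac}", f"/pr:{profile}"]
    return [run_pre_script, publish]


def run_steps(steps: List[List[str]],
              runner: Callable = subprocess.run) -> None:
    """Run each step in order; check=True stops at the first failure."""
    for step in steps:
        runner(step, check=True)
```

Because `check=True` raises on a non-zero exit code, SQLPackage never compares against a database that the first script only partially modified.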

The idea is pretty clever, though I haven't come across a lot of need for it when trying to deploy DB changes. I'm not quite comfortable with a script running to change the state of the database to something unknown prior to running the script to bring it in line with version control. I suppose it has some use-cases, though, as I've come across this request several times on Stack Overflow.

Tuesday, August 26, 2014

SQL Projects & Change Data Capture

I ran across this article from MSSQLTips.com discussing the use of CDC with SQL Projects. We don’t use CDC with our current systems so I’d never tried to investigate this, but I would also have expected better support out of the box. In the meantime, Ray Barley wrote up a good article on enabling CDC for the Database and then using post-deploy scripts to enable CDC for tables.

Find the article here: www.mssqltips.com/sqlservertip/3316/how-to-enable-change-tracking-in-a-sql-server-database-project/

Monday, November 4, 2013

SSDT Issue–Online Index Option Ignored

Due to an issue with SSDT “working as designed”, the ONLINE=ON option is not one of the criteria SSDT uses when comparing indexes. On the positive side, it means that any index already created with that option won’t have it dropped. On the negative side, it means that any index that is changed or added through a publish will not have that option.

If this is an issue to you, comment and vote on the related Connect ticket. If we can garner enough votes and interest, perhaps MS will hear us and change their mind about whether or not the ONLINE=ON should be part of the release script.

In the meantime, if you absolutely need this functionality, generate scripts for your releases and ensure that all index creates/alters include the option you want.

Edit: There's been some discussion about this on the MSDN forums. Kevin Cunnane posted several responses pointing to a Codeplex example of how this might be possible by compiling a DLL and referencing it through the SQLPackage command line. I have not had a chance to try that out yet, but am including the forum and Codeplex links for those who want to investigate further.

Forum post

Codeplex example

Tuesday, October 22, 2013

SSDT: Adding Logins for “Execute As”

We recently encountered an issue requiring us to use “Execute As” syntax in some of our stored procedures. Of course, we have multiple environments which all have different permission definitions so this would cause a bit of an issue. In this case, the login itself is standard in all of our environments, but we don’t define those within the project for the most part.

First problem: Add a new user called “MyLogin” to the project.

Solution: This was easy enough. I just added a new user to the project. However, I then ran into an issue: the Login for “MyLogin” did not exist in our master.dacpac reference.

Second Problem: Create a SQL Login reference within the master dacpac file that could be seen by all of our projects.

Solution: This proved to be a bit trickier. The model.xml file inside the default master.dacpac files contains many system-level objects. We would need to find the appropriate XML syntax for a new SQL Login element, add it to the model.xml file, and then update the hash for the dacpac file.

Extract the Model.xml file from our master.dacpac file

This can be easily done by finding your master.dacpac file that you referenced in your project and either renaming it to a .zip file or using a tool such as 7-zip to right-click the file and open it. Extract its “model.xml” file to some working folder.

Add a new SQL Login element to the model.xml file

Now that there’s a copy of model.xml to edit, open it in your favorite XML or text editor. I like Notepad++, but even plain Notepad will work. Navigate to the very bottom of the file. We want to add the new element just before the </Model> tag (for ease of use, not a requirement). The example XML is below. Note the “GloballyScoped” annotation type. This treats the entity as something that is available outside of the current database.

<Element Type="SqlLogin" Name="[MyLogin]">
  <Property Name="Password" Value="MyPassword" />
  <Annotation Type="GloballyScoped" />
</Element>

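Update the hash for the dacpac file

Once the edited model.xml is back inside master.dacpac, run:

dacchksum.exe /i:Master.dacpac /u /v

This updates the checksum stored in your master.dacpac file so that it matches the edited model, and the file will work as a database reference again.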

Add your user to the project

Now that there’s a Login for the user to reference, you can add the user to the project. Right-click the project, select “Add”, and then choose the “User” option. You’ll have a new User created, which you can easily set up to reference the new login in your master file.

Overall, not my favorite way to do these things, but if I know that a login will exist on the server I am more comfortable with adding a user to the project than I would be otherwise. You could still run into issues if this login doesn’t exist everywhere you want to use the project.
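If you would rather script the model.xml edit than do it by hand, here is a minimal sketch in Python. It assumes the dacpac is an ordinary zip archive with a "model.xml" entry, as described above, and it uses the same example login and password. The function names are my own for illustration. It only produces the edited XML text; putting the file back into the dacpac and regenerating the hash with dacchksum.exe still have to be done as described in the steps above.

```python
import zipfile
from xml.sax.saxutils import quoteattr


def read_model_xml(dacpac) -> str:
    """Read model.xml out of a dacpac (a zip archive) as text."""
    with zipfile.ZipFile(dacpac) as package:
        # utf-8-sig drops a byte-order mark if the file starts with one.
        return package.read("model.xml").decode("utf-8-sig")


def add_login_element(model_xml: str, login: str, password: str) -> str:
    """Insert a SqlLogin element just before the closing </Model> tag."""
    closing = model_xml.rfind("</Model>")
    if closing == -1:
        raise ValueError("no </Model> tag found in model.xml")
    element = (
        f"<Element Type=\"SqlLogin\" Name={quoteattr('[' + login + ']')}>\n"
        f"  <Property Name=\"Password\" Value={quoteattr(password)} />\n"
        "  <Annotation Type=\"GloballyScoped\" />\n"
        "</Element>\n"
    )
    return model_xml[:closing] + element + model_xml[closing:]
```

Working on the text rather than re-serializing the XML keeps the rest of the file byte-for-byte as it was, which mirrors the manual edit.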

Final Thoughts

As Gert mentions, this trick is for adding any object to master. If you have a copy of sp_whoisactive or sp_blitz that you want to reference for some reason, you can add them to some one-off project, then copy that XML Element node into your master’s model.xml, regen the hash, and enjoy a new globally referenced object. It’s a useful trick, though one I hope not to have to use very often.

Tuesday, May 14, 2013

SSDT: Setting Different Permissions per Environment

Attribution - I'm borrowing heavily from Jamie Thomson's ( blog | twitter ) post

A strategy for managing security for different environments using the Database Development Tools in Visual Studio 2010

Jamie addressed this problem for the older DB Projects (Datadude, VSTSDB, DBPro, etc.). He came up with an excellent, if somewhat complex, solution to effectively handle pushing different permissions to different environments. This is my attempt to update this and distill it into a "how to" for SSDT and SQL Projects, but I freely admit that this is based on Jamie's excellent work.

In general, the steps we'll want to take are:

- Generate a set of SQL Logins that will be used on the server.

- These should be run on the servers or local box already - we will not use these within the SQL Project itself though you can if you wish.

- Generate the files for users/roles/permissions for each database project and environment.

- Add the files to your SSDT SQL Project

- Create and set a variable for your environments in your SSDT SQL Project.

- Adjust your Security Wrapper file to handle your environments.

- Adjust your post-deploy script to call the Security Wrapper.

As you can see from the screenshot, we have a "Permissions" file containing granted permissions, a "RoleMemberships" file that adds users to various roles, a "Sales" file for the Sales schema, and 3 files each for the users - one for its schema, one for its login, and another for its user. Confusing, but not necessarily a problem until you need a release where User1 does not and will not exist on the server or where User2 belongs to a different domain than your production servers and can't and should not be created there because there's no trust relationship.

Let’s remove all of those files for objects other than Schemas from the project before we start. You’ll probably have to look at each file to verify before removing it from the project if you’re not sure which file is which. If you accidentally remove the files for the schemas, you’ll get errors and need to re-add a “Schema” to this security folder for each schema that is used.

Generate SQL Logins

This Microsoft KB article details the sp_help_revlogin stored procedure. Create this on your source database, run it, and use the output to re-create those logins elsewhere. This will copy the server logins, but no permissions or database users.

Generate the Security Files through PowerShell

First, download the PowerShell_CreatePermissions.Zip file. Extract that to a folder on your hard drive.

Note - the script assumes that you will use Windows authentication to connect to your SQL Server. The script will need to be adjusted if you need to use SQL Authentication.

Edit the "GeneratePermissions.ps1" file. Replace the "DB1", "DB2", etc. with whatever database names you'll be using. Also uncomment any of the extra lines that you'll need in the file. (There is likely a better way to do this in PowerShell and I'm more than open to suggestions on how to build up this array in a more concise manner.)

The assumption is made that the databasename == the projectname. If that's not the case, adjust your DB/Project names accordingly.

Open a PowerShell prompt in that folder after editing/saving the file.

Run the file using something like this commandline:

.\GeneratePermissions.ps1 -SQLInstance "localhost" -Environment DEV

"localhost" should be replaced with your servername

DEV in this case indicates that this will be tied to a variable value of "DEV" when the project is published.

I'd recommend running this for every environment at one time if you can. It will make adding the files to the project much easier.

If you run for several environments, you'll see a folder structure something like the following for each project (in this case for Adventureworks2012):

Inside your "SecurityAdditions" folder, you'll see the following files and folders:

Each of these files will be used to add permissions for each environment. In this case, we have permissions set for our Development, QA, Local, Production, and Staging environments.

Now, let's add the "SecurityAdditionsWrapper.sql" file from the files we extracted earlier into your "SecurityAdditions" folder. Feel free to edit it as needed to match the environment(s) you want to use for your database project. By default, it's set to look for the above files plus a "Default" file to be a catch-all. Adjust that so all files/environments match the files you created and save it.

Add the files to your SSDT SQL Project

Copy your newly created folder(s) to the appropriate project. Open each project, and you will notice that the files still don't show up. Click the project file. You should see a menu item that's now enabled to "show all files".

You'll see a bunch of files that aren't part of the project under a "Scripts" folder:

We want to add them to the project. Right-click the "Scripts" folder and select the option to "Include in Project". Click the Project file again and turn off the option to show all files.

- We need to set all file properties to "Not in Build" or there will be errors when trying to build the project.

Create and set your Variable to change environments

Open the Project Properties window

We want to add a new SQLCMD variable called "DeployType". Set its default value to "Local" for now, or to whichever environment you have a set of security scripts for.

Adjust your Security Wrapper files

The SecurityAdditionsWrapper.sql file in the code set I've created comes with settings for "Dev", "QA", "Staging", "Production", "Local", and "Default". It's likely that you will not use all of these in your setting. One drawback to using SQLCMD to wrap all these files together is that if you don't have a file or set of files, the script will start throwing errors because an expected file doesn't exist. Edit the SecurityAdditionsWrapper.sql file to remove any of the configurations you will not use or change the names to match the environment values you used earlier.
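Since a missing file only shows up as an error at deploy time, it can help to check the includes beforehand. The following is a rough sketch in Python, not part of the downloadable scripts: it scans SQLCMD text for `:r` lines and reports any referenced file that does not exist. The function name is hypothetical, and `base_dir` is an assumption you need to supply, since the folder that relative `:r` paths resolve against depends on how the script is run.

```python
import re
from pathlib import Path
from typing import List

# Matches lines such as:  :r .\SecurityAdditions\Dev\Users.sql
INCLUDE = re.compile(r'^[ \t]*:r[ \t]+"?([^"\r\n]+?)"?[ \t]*\r?$',
                     re.IGNORECASE | re.MULTILINE)


def missing_includes(script_text: str, base_dir) -> List[str]:
    """Return the :r paths in script_text that do not exist under base_dir."""
    missing = []
    for match in INCLUDE.finditer(script_text):
        include = match.group(1)
        if "$(" in include:
            # Paths built from SQLCMD variables cannot be checked statically.
            continue
        # Normalise Windows separators so the check also works elsewhere.
        if not (Path(base_dir) / include.replace("\\", "/")).exists():
            missing.append(include)
    return missing
```

Running this against the wrapper file before a publish gives you the list of environment files to either create or remove from the wrapper.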

Adjust your Post-Deploy script

Finally, we need to open your Post-Deploy script.

Add the following to your script:

:r .\SecurityAdditions\SecurityAdditionsWrapper.SQL

This will include your SecurityAdditionsWrapper file as part of your post-deploy process. See my earlier post on selectively running pre/post deploy scripts if you want finer control.

SSDT: Trick to Not Run Pre/Post SQL on Publish

We’ve run across the need to avoid running certain scripts when publishing a brand new copy of a database from a SQL or DB Project from time to time. Often, those pre/post deploy scripts are designed to be run only against an existing system and don’t make sense for a brand-new, empty database.

We’ve worked around this by using a SQLCMD variable called DeployType and setting that variable to “New” for any build operation that will be used only to create the initial database. The code snippet for our Post-Deploy script looks something like the following:

-- Place scripts here if they should only run for existing/working DB

IF ( '$(DeployType)' <> 'New' )

BEGIN --Run scripts

PRINT 'Deploying scripts.'

END

--Security - runs last

:r .\SecurityAdditions\SecurityAdditionsWrapper.sql

The above script will look at the DeployType variable. If it’s set to “New”, it will ignore all of your deploy scripts in that block. If it’s set to any other environment, it will run the appropriate scripts if any exist. You can use this to control which environments will use certain scripts as well.

Wednesday, January 23, 2013

SSDT, Publishing, and Referenced Databases

In my haste to get the projects working, I added a database reference to the current project. I would build my project and reference the generated *.dacpac files when publishing. The problem is that every time I built my project, the referenced dacpac file would overwrite the generated dacpac file.

Results from this problem - I noticed that my "DeployType" variable wasn't referenced anywhere, which struck me as odd because I use it in my Pre and Post Deploy scripts. I also started noticing that my dacpac file in my build folder was out of date, even after a successful build.

Workaround - do what I have done otherwise - replace all instances of CurrentDatabaseName.dbo. with just dbo. in all SQL files in the project. This also includes the various forms that this can take:

Databasename.dbo.

Databasename..

[Databasename].[dbo].

etc

Once I replaced all of those references and rebuilt the project successfully, my publish actions started working again. My Deploy scripts were included. I didn't get mysterious warnings about my variables not existing.

There may be a different way to work around self-referenced database objects within SSDT, but until I come across that, the workaround is to remove them from the code and not to just add a reference to the current database through a dacpac file.
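For a large project, the replacement can be scripted. Below is a hedged sketch in Python of one way to do it with a regular expression; it is my own illustration rather than anything SSDT provides. It handles the three forms listed above, keeps whatever schema was written, and assumes that the `Databasename..` form should become `dbo.`. It works on plain text, so it will also touch matches inside comments and strings. Review the changes before committing them.

```python
import re


def strip_self_references(sql: str, database: str) -> str:
    """Remove the current database name from three-part object names."""
    db = re.escape(database)
    pattern = re.compile(
        # Not preceded by an identifier character or dot, so four-part
        # names and longer database names are left alone.
        rf"(?<![\w\].])(?:\[{db}\]|{db})"
        # Optional schema (bracketed or plain) between the two dots.
        rf"\.(\[[^\]]+\]|\w+)?\.",
        re.IGNORECASE,
    )

    def keep_schema(match: "re.Match") -> str:
        # Databasename..Object has no schema; assume dbo.
        return (match.group(1) or "dbo") + "."

    return pattern.sub(keep_schema, sql)
```

For example, passing "SELECT * FROM [Databasename].[dbo].[Orders]" and "Databasename" returns "SELECT * FROM [dbo].[Orders]", while references to other databases are left untouched.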

Monday, November 12, 2012

SSDT: Tips, Tricks, and Gotchas

I wanted to add a short post to make sure I highlight some things that will trip people up or otherwise cause issues.

- When setting trigger order for triggers on a table, you could run into an issue with Publishing your database. The project will build successfully, but throw a "Parameter cannot be null" error for parameter "Key". This is an internal bug with the product as of at least SQL 2012 CU2. It's been entered as an internal bug within MS with a workaround. To work around the issue, do not try to set the trigger order in the table definition, but rather put this code in a post-deploy script.

- This is supposed to be fixed in a future release of SSDT so your experience may vary.

- Importing encrypted objects may fail. If you want to store encrypted objects in your project, you'll likely need the code in order to create them in the project.

- Unlike the Schema Compare option in VS 2010, there is no way to set the default Schema Compare options nor a way to filter out "skipped" objects in the schema compare.

- I’d love some ideas on how better to handle this. You may be able to save the compare within the project for future re-use, but I had little success with this in VS 2010 Projects so have been reluctant to try that route again.

- If your database uses FILESTREAM, you will not be able to debug using the default user instance that SSDT provides. You will need to point your debugging instance to a SQL Server install that supports FILESTREAM. See this forum post for more details.

- Set this in the Project Properties and point to an actual instance of SQL Server that supports the feature(s) you want to use.

- SQL Projects will substitute CONVERT for CAST in computed columns at the time of this writing. They also push default constraints with extra parentheses around the default value. If you clean these up from the target server, be aware that on your next Publish action, you could end up with extra work being done to make your target database use those parentheses or the CONVERT function.

- To work around this, do a SQL Schema compare after a release to find any areas where the schema on the server differs from that in the project. For instance you may see a DEFAULT (getdate()) in your server, but have DEFAULT getdate() in your project. Add the parentheses to your project to avoid unnecessary changes.

Do you have any tips to share? Add them to the comments below.

Friday, November 9, 2012

SSDT: SQL Project Snapshots

This builds a dacpac file into a folder within the project called "Snapshots" with a default name format of Projectname_yyyymmdd_hh-mi-ss.dacpac.

This file contains a build of the project at the time the snapshot was taken, including all objects and scripts.

Uses (by no means a complete list)

- Save a specific version of your project to use for release

- Save this version of the project before making changes to the underlying project

- Use as a source for schema compare

- See the differences between snapshots and/or the current project through schema compare

- Baseline your project

- Roll back to this state

- Schema Compare with the snapshot as the source and the Project as the target

- Import into a new project with this as the source.

- Import into current project, but be aware that this could easily produce a lot of duplicates
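If you script releases from snapshots, the default name format makes it easy to pick out the most recent one. This is a small sketch in Python under a couple of assumptions: the file names follow the Projectname_yyyymmdd_hh-mi-ss.dacpac pattern mentioned above, and the hour is on a 24-hour clock. The function names are hypothetical.

```python
import re
from datetime import datetime
from typing import Iterable, Optional


def snapshot_time(filename: str, project: str) -> Optional[datetime]:
    """Parse the timestamp from a snapshot name, or None if it doesn't match."""
    match = re.fullmatch(
        re.escape(project) + r"_(\d{8}_\d{2}-\d{2}-\d{2})\.dacpac", filename)
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y%m%d_%H-%M-%S")


def latest_snapshot(filenames: Iterable[str], project: str) -> Optional[str]:
    """Return the newest snapshot file name for the project, if any."""
    dated = [(snapshot_time(name, project), name) for name in filenames]
    dated = [(taken, name) for taken, name in dated if taken is not None]
    return max(dated)[1] if dated else None
```

Files that don't match the pattern, such as snapshots you renamed by hand, are simply ignored.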

Thursday, November 8, 2012

SSDT: Publishing Your Project

Build the project

In order to successfully publish your project, it must first be able to build successfully.

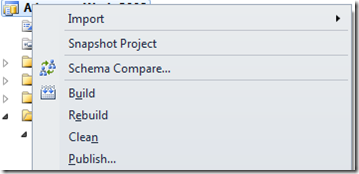

Start by building your project. Right-click the project and select "Build".

If the build is successful, the project can be published.

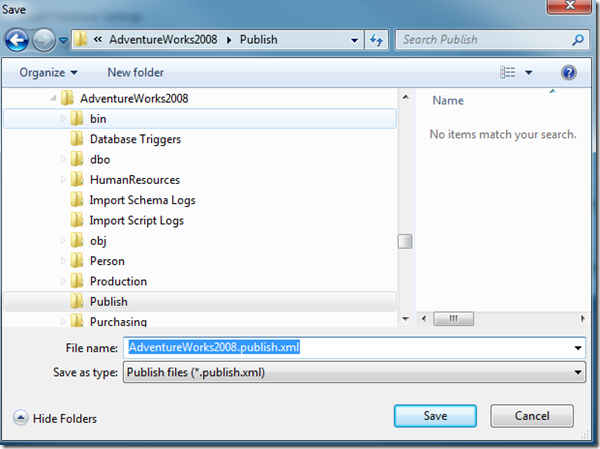

You may want to create a folder within your project to store saved Publish Profiles. These can be used later to easily publish the project to your servers.

Creating Publish Profiles

Right-click the project and select Publish. This will bring up the Publish Database dialog.

- Choosing to publish or opening a saved publish profile will initiate a build of the project.

Choose your target database, set your advanced options (similar to Schema Compare options), and choose the "Save Profile As" option to save this to a location within your project. Selecting the "Add profile to project" option will create the publish profile in the root of the project. You may wish to either move the file to a folder storing all of your publish profiles or, if you saved it without adding to the project, show all files of the project so you can include the file in the project.

Some options you may want to consider:

- "Always re-create database" - this will re-create the database. Any data in the database will be lost.

- "Block incremental deployment if data loss might occur" - If any change could result in data loss, this option will stop the publish action rather than run the script.

- "DROP objects in target but not in project" - This will remove anything in the database that doesn't exist in the project. Useful if you want consistency, but you may want to ensure this isn't checked if there could be objects in the database that were created, but didn't make it to the project.

Under the "Advanced Deployment Options"

- Allow Incompatible Platform - Useful if you may publish to a different version of SQL Server than the one specified in the project

- Include transactional scripts - Will run the entire update operation as a transaction. If any one part fails, the transaction will roll back. If you have cross-database dependencies, selecting this option could result in no changes being published if you're publishing to a new server. For a new publication, you may want to de-select this option to ensure a successful deploy of what can be published.

- Script state checks - This option will ensure that the publish action will only work on the specified server and database.

- Verify deployment - Checks the database and project before publishing to try to ensure there are no changes that will cause problems with the publication such as missing data for a foreign key.

Using Publish Profiles

Once you've set up your publish profiles, you can easily use these to push changes to that server and database without needing to specify additional parameters. The easiest way to use them is to double-click the Publish Profile within the project and choose to either "Generate Script" or "Publish".

Generate Script will generate a script for you to use to update the target at a later time (run in SQLCMD mode).

Publish will immediately attempt to push the changes to the target.

You can also use these at a later point to push changes through the SQLPackage.exe command line.

SQLPackage

To publish your package through a command line we use something like the following:

- sqlpackage /a:publish /sf:.\sql\Local\Adventureworks2008.dacpac /pr:.\Publish\Local.publish.xml

The above will:

- Use the "Publish" Action

- Use the Source File named Adventureworks2008.dacpac, built in the sql\Local folder

- Use the publish profile named "Local.publish.xml" (defined to push to the local SQL Server)

You may want to add SQLPackage.exe to your path. By default it is installed in:

C:\Program Files (x86)\Microsoft SQL Server\110\DAC\bin

You can override quite a few of the default settings through various command line arguments. This includes source, target, and variables. You can find a full list of the command line arguments at the SQL Package reference online.
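As an illustration of those overrides, here is a minimal sketch in Python that assembles a publish command with an optional target and SQLCMD variable values. It uses the short-form arguments `/a`, `/sf`, `/pr`, `/tsn`, `/tdn`, and `/v`; the function name and the example values are hypothetical, and it only builds the argument list rather than running anything.

```python
from typing import Dict, List, Optional


def publish_args(dacpac: str, profile: str,
                 variables: Optional[Dict[str, str]] = None,
                 target_server: Optional[str] = None,
                 target_database: Optional[str] = None) -> List[str]:
    """Build a SQLPackage publish command, overriding profile settings."""
    args = ["sqlpackage", "/a:Publish", f"/sf:{dacpac}", f"/pr:{profile}"]
    if target_server:
        args.append(f"/tsn:{target_server}")      # target server name
    if target_database:
        args.append(f"/tdn:{target_database}")    # target database name
    for name, value in (variables or {}).items():
        args.append(f"/v:{name}={value}")         # SQLCMD variable override
    return args
```

For instance, `publish_args("Adventureworks2008.dacpac", "Local.publish.xml", {"DeployType": "New"})` adds `/v:DeployType=New` to the command, which would set the DeployType variable used in the pre and post deploy scripts for that run.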

Jenkins Automation for CI

We use Jenkins at my current workplace and set up a Jenkins job to do the following (With thanks to Matthew Sneeden for the assistance.):

- Get the latest from our mainline repository

- Build each SQLProj file.

- Building the SLN file instead would also attempt to publish the database.

- msbuild .\Adventureworks.sqlproj /t:build /p:Configuration="Local"

- This assumes that msbuild.exe is in your path.

- Configuration is mostly to control the location of the dacpac file generated.

- Run SQLPackage w/ a specified Publish Profile for the appropriate environment and using the newly built dacpac as the source.

We are currently investigating how we can use Snapshot files to better control releases to our UAT and Production environments. This series will be updated when that information is available.

Wednesday, November 7, 2012

SSDT: Errors and Warnings

SSDT includes an Errors and Warnings window that is well worth your attention. Ideally, your project should have no errors or warnings.

However, sometimes coding errors slip into your project or you get warnings that an object can't be found because it exists in another database. Sometimes a warning might appear because an object is missing completely, in this project or another one. In these cases, it's well worth checking this section to find out where you may have some issues.

Warnings are not necessarily a problem. SSDT will bring possible issues to your attention, but warnings will not stop a project from building and publishing unless you have set the option to treat warnings as errors or unless there really is an underlying problem that causes an issue during the publish phase.

For example, if I modify the Person.Address table to add a new column in the code, but forget to add a comma, I'll get an error something like this.

If you double-click on the line, the editor should open the appropriate file and take you pretty close to your problematic line. Correct the problem, save the file, and move on to the next error.

Some common warnings/errors

- 4151 - Unresolved database reference. This is often caused when an object in this database references an object in another database. It can often be resolved by creating a database reference.

- 71562 - Unresolved database reference warning.

- 71502 - Another unresolved database reference warning.

How to globally suppress certain warnings

- Right click the root of the project and select properties

- Click on the "Build" tab

- Enter the numeric portion of the codes, separated by commas, in the "Suppress Transact-SQL warnings" field. Remove the "SQL" prefix and any leading zeroes when entering each code.
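If you copy the codes straight out of the Errors and Warnings window, the trimming can be automated. A tiny sketch in Python, with a hypothetical function name, that strips the "SQL" prefix and leading zeroes and joins the result with commas:

```python
import re
from typing import Iterable


def suppress_value(codes: Iterable[str]) -> str:
    """Turn codes such as 'SQL04151' into the comma-separated numeric form."""
    numbers = []
    for code in codes:
        match = re.fullmatch(r"(?:SQL)?0*(\d+)", code.strip(), re.IGNORECASE)
        if match is None:
            raise ValueError(f"not a warning code: {code!r}")
        numbers.append(match.group(1))
    return ",".join(numbers)
```

Passing the three codes listed above with their "SQL" prefixes gives "4151,71562,71502", ready to paste into the project properties.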

Tuesday, November 6, 2012

SSDT: Updating a Project Manually

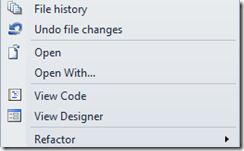

If you know the name and location of the file you want to edit, just go straight to it and right-click it.

You have two ways to edit the file - using "View Code" will bring up a T-SQL script. Edit as you would any other T-SQL script and save. Remember that SSDT scripts create all base objects using CREATE scripts.

If you choose to View Designer, you'll see a new screen combining a design view with a T-SQL editor.

Here you can choose to edit within either window. The code will be kept in sync across the panels. You can right-click any of the Keys, Indexes, Triggers, Constraints in the upper window and choose to add a new one. You'll get a shell of the script to create a new object tied to this table. Modify its code to match what you want to do and save the file.

- This is different behavior from the older Database Projects. Those would create separate files for each object by default. In SSDT, the scripts are all put together in one script unless you upgraded from a DB Project.

- The only place SSDT supports "GO" to break up the batches is within these create scripts. You cannot use GO in a post or pre deploy script.

- If you highlight a column in the table, you can give it a description in the Properties window. This is an easy way to propagate object/column descriptions into your SQL Server.

- You can select the table's properties in the Properties window dropdown to edit its properties/description.

SQL Server Object Explorer

If you hit the F5 key inside of a project to debug the project, SSDT will build a local database, often hosted in an instance called (localdb)\Databasename. This will run the database project in a debug session. If you then open the SQL Server Object Explorer view, you can edit the project and underlying scripts.

This works in most instances where you’re using basic SQL Server functionality. If you’re using any more advanced features such as Filestream, you’ll want to change the location for this debug instance. You can change these in the project properties.

Double-clicking the HumanResources.EmployeePayHistory table above brings up the editor for the underlying table in the project.

Monday, November 5, 2012

SSDT: Updating a Project by Importing Scripts

Find your script or scripts.

Select your options for import:

If you see the following text in your log, be sure to check this file to see if something was missed on import. You'll need to manually make these changes, if applicable. In a lot of cases, the statements not understood tend to be "GO" statements.

“In the script that you provided to the import operation, one or more statements were not fully understood. These statements were moved to the ScriptsIgnoredOnImport.sql file. Review the file contents for additional information.”

I've also found that "ALTER TABLE" statements are not well understood by SSDT within the imported scripts. If you get several scripts that include these and aren't understood, you can either compare the physical database to the project or manually update the project. (This has been acknowledged by Microsoft as “working as designed” even if we might wish that this could actually change the script for the object instead.)

Friday, November 2, 2012

SSDT: Updating by Using Schema Compare

If you are using VS2010, there are two options for SQL and Data.

- The "Data" menu is used for the older DB Projects within VS2010. There's a useful data compare option in there, but the schema compare will not work for SQL 2012 or SQL Projects.

- The "SQL" menu contains the Schema Compare item with a sub-item to do a new Schema Comparison

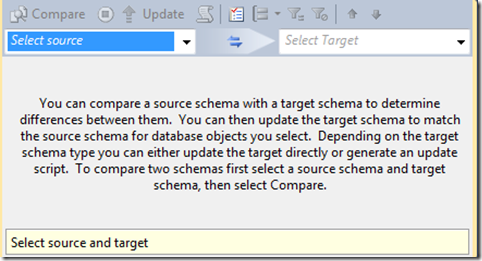

To update your project from a shared database, start a "New Schema Comparison". You'll see a screen something like this:

Setting your source/target is pretty straightforward and each will produce a screen something like the following:

You can choose to compare against an open project, a live database, or a Data-tier Application File (dacpac). In our case, we are going to select a live database as the source and our project as the target.

Once selected, you may want to change the options to exclude certain object types or ignore certain settings such as file placement. Unlike in VS2010, the options cannot be set to some default at this time. When you've set the options to your liking, click the "Compare" button.

If there are any differences, you'll see a list of them here. The comparison window shows where there are differences between the source and target. This can be really helpful to know whether or not to include this change in the update.

To exclude a change, clear the checkbox next to it in the upper section of the window. A green + indicates that this object will be created. A red - indicates that the object will be dropped. The blue pencil indicates that the object will be modified. At this time there is no way to hide any unchecked items that show in the compare.

Once you're satisfied with your selection, click the "Update" button to push those changes into your project.

Thursday, November 1, 2012

SSDT: Pre and Post Deploy Scripts

These scripts are not created by default in a new SSDT project. To organize your scripts, you may want to create a folder structure similar to the following.

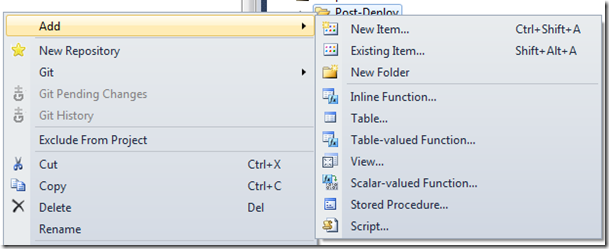

If you right-click the Post or Pre Deploy folders, you can choose to add a Script item.

You will then get a choice of which type of script to include. Choose Pre or Post deployment, as appropriate, and name the file accordingly.

You can only have one Pre-Deployment script and one Post-Deployment script per project as part of the Build! All included scripts must be set to “Not in build” or you will likely have errors.

If you look at the properties of these scripts, you will see that the Build Action is set to PreDeploy or PostDeploy. Opening the Post-Deploy script will show this default text.

/*

Post-Deployment Script Template

--------------------------------------------------------------------------------------
 This file contains SQL statements that will be appended to the build script.
 Use SQLCMD syntax to include a file in the post-deployment script.
 Example:      :r .\myfile.sql
 Use SQLCMD syntax to reference a variable in the post-deployment script.
 Example:      :setvar TableName MyTable
               SELECT * FROM [$(TableName)]

--------------------------------------------------------------------------------------

*/

Using Pre and Post Deploy Scripts

These files are interpreted as SQLCMD scripts by SSDT. You can use most valid SQLCMD syntax within the script file to include other scripts and set variables. At the time of this writing, you cannot use the :ON ERROR IGNORE command within a script. This will hopefully be addressed in a future release of SSDT.

Pre-Deploy scripts will always run before the schema changes are run. However, the schema changes are calculated before the Pre-Deploy script executes, so if the script alters the schema, the generated changes may no longer match the database and you may encounter errors with the release. E.g., if you make schema changes to the Person.Person table, but drop that table in a Pre-Deploy script, your publish action will likely fail.

Likewise, Post-Deploy scripts will always run after the publish action. This makes them a great place to insert new data, make small adjustments to the system, or perhaps implement custom security and permissions. The caveat to using post-deploy scripts to make changes is that the changes need to be repeatable. If you write an insert statement for a new lookup value, that same insert will run next time unless you check for the existing value first.
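As a minimal sketch of a repeatable insert, the script can check for the value before adding it. The table dbo.OrderStatus and its columns are hypothetical names used only for illustration.

```sql
-- Hypothetical lookup table and values, for illustration only.
-- The existence check makes the script safe to run on every publish.
IF NOT EXISTS (SELECT 1 FROM dbo.OrderStatus WHERE StatusCode = N'BACKORDER')
BEGIN
    INSERT INTO dbo.OrderStatus (StatusCode, StatusName)
    VALUES (N'BACKORDER', N'Back-ordered');
END;
```

The first publish inserts the row. Later publishes find it and skip the insert.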

To create a new script to run in pre or post deploy:

- Right-click the appropriate folder (Pre-Deploy, Post-Deploy, other)

- Choose to add a "script" and select the "Script (Not in build)" option

- Give it a name, preferably one without spaces, as that makes it easier to reference from the pre or post deploy script.

- Add your code changes. You may want to give it some descriptive comments and sometimes a Print statement can be helpful if you want to see progress as you run a deploy script manually.

- Make sure that you can re-run this script without causing errors! Check for data that may already exist, use outer joins, wrap your code in Try/Catch - whatever you need to do to make sure that you can have this run again if necessary.

- After saving the script, edit your pre or post-deploy script and add a new line in a non-commented area, something like:

  :r .\MyScript.sql

  This assumes that your script is in the same folder as your pre or post-deploy scripts. Adjust the relative paths as needed for the script you created.

- Save your deploy script.

The next time you publish your project, it will pick up this script and include it in your change set. If you choose to create a script on publish, you can see the full text of your scripts included in the pre or post-deploy section of your change script.
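Putting the steps above together, a Post-Deploy script might look something like this sketch. The included file names are hypothetical, and both files are assumed to sit in the same folder as the Post-Deploy script with their Build Action set to "Not in build".

```sql
/* Post-Deployment script (Build Action = PostDeploy) */
PRINT 'Starting post-deployment scripts...';

-- Hypothetical script files; each one should be safe to re-run.
:r .\PopulateOrderStatus.sql
:r .\GrantAppPermissions.sql

PRINT 'Post-deployment scripts complete.';
```

The PRINT statements are optional, but they make it easier to follow progress when the generated script is run by hand.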

Cleanup of Pre and Post Deploy Scripts

There are several options for cleanup, but one of the best suggestions I've seen has been to generate project snapshots and then remove the script references from your pre/post deploy scripts and the script files themselves from the project. They will still be saved in the snapshot, but will not be in your project anymore. You may be able to manage this well through your Version Control System, but snapshots do have some advantages.

Pros:

- Your project will remain somewhat clean.

- Script files will be saved with their appropriate snapshot, ideally tied to a particular release.

- Less concern about whether a script is replayed because it's removed.

- Good for scripts that have a larger impact and should only be run one-time.

Cons:

- It's a manual process and requires some attention to detail.

- You need to look in the snapshots to see what scripts were used.

- It may require that you have a more formalized release process.

- You may need to publish several snapshots to bring the database up to a current version.

Wednesday, October 31, 2012

SSDT: External Database References

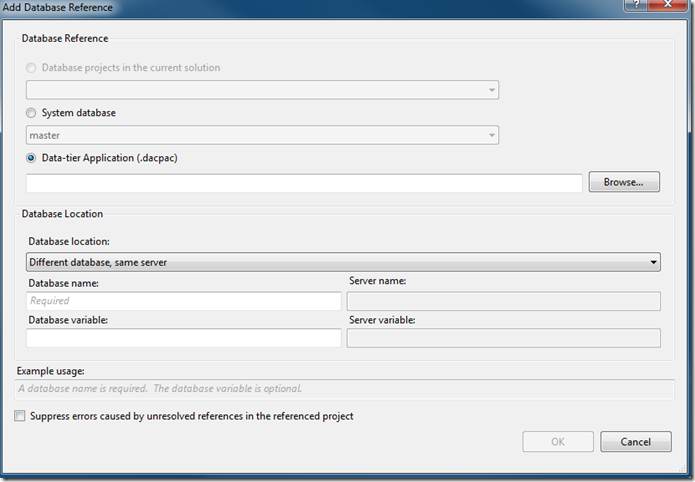

To add a Database Reference to your project

- Right-click the "References" folder in your project or choose the "Project" menu and select the option to "Add Database Reference"

- Browse for your dacpac file

- Choose the appropriate database location. (Same, Different DB / Same Server, or Different DB / Different Server)

- If your database name doesn't change, clear out the "Database Variable" column. It isn't immediately obvious, but doing this will let you keep referencing your database in the same manner you do currently.

- If this database name can vary, populate the Database Variable field. You'll need to replace this reference in your code with the variable from this column.

- If you're referencing a database on a linked server, this would probably be better served with a static database name and a "Server Variable". Make sure that server variable is set appropriately when you publish the database.

- This should be addressed with a future release of SSDT.

- You may choose to suppress errors if there are unresolved references in the project. This is useful if your dacpac file could be out of date. You can still code against an object you know to exist, but isn't in the reference file yet.

- If you use Linked Servers, you’ll probably want to use the Server Variable to ensure that you can release your code in different environments.

Some Best Practices and Lessons Learned

- Make a shared folder to store your dacpac files in a common place. This should be easily accessible from all of your SQL Projects.

- If you use C:\DatabaseProjects as your base for all SQL Server projects, create a folder in there to store your shared dacpac files.

- Because all paths are relative to the project, you may need to copy the master/msdb dacpac files that ship with SSDT into your shared folder. You can find these files in your Visual Studio folder in a path similar to the following.

Drive:\Program Files (x86)\Microsoft Visual Studio 10.0\Common7\IDE\Extensions\Microsoft\SQLDB\Extensions\SqlServer\VersionNumber\SqlSchemas

- If you support multiple SQL Server editions, you may want to rename master/msdb files with their user-friendly version number. E.g., the master.dacpac file in your "90" folder could be renamed to master2005.dacpac

- This should be addressed in a future release of SSDT and could already be fixed.

- If you use variables, don’t forget to replace the hard-coded database or server name in your T-SQL with the variable, in the form [$(VariableName)].

- Square brackets may not be necessary, but in most cases, they’ll work as expected for DB or Server names.

- If you need to reference a dacpac that is already in use, you’ll need to create a copy of it. This comes up more often if you’re referencing a replicated database for which you already have a production dacpac.

- Choose a clear, descriptive name for the Server variable.

- Don’t forget to set the variables in the Project and/or Publication properties!
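As a rough sketch, a view that reads from a referenced database through a Database Variable could look like the following. The variable name ReportingDb and all table and column names are assumptions for illustration; use whatever name appears in the "Database Variable" column of your reference.

```sql
-- Hypothetical view. ReportingDb is an assumed Database Variable name;
-- SSDT substitutes the real database name at publish time.
CREATE VIEW dbo.vCustomerOrders
AS
SELECT c.CustomerID, c.CustomerName, o.OrderID, o.OrderDate
FROM dbo.Customer AS c
JOIN [$(ReportingDb)].dbo.Orders AS o
    ON o.CustomerID = c.CustomerID;
```

For a linked server, the same pattern would add a Server Variable in front, e.g. [$(ReportingServer)].[$(ReportingDb)].dbo.Orders, where ReportingServer is again a hypothetical name.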

Tuesday, October 30, 2012

SSDT: SQL Project Options

Project Settings

Here you can set your normal target platform (SQL 2005, 2008, 2012, or Azure).

You can enable the T-SQL verification option, but this is mostly for Azure projects.

If you will generally output to a script, choose the "Create script" option. On the whole, just using the dacpac file will likely provide greater flexibility in the long run as it can be adapted to a different target. Generating a script will only be guaranteed to work against the target used to generate the script.

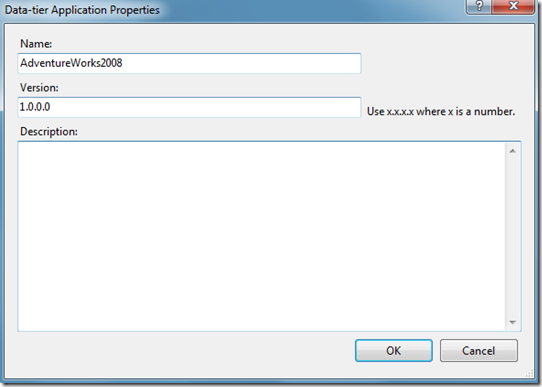

You can also set the dacpac file properties. Sadly, these do not do much unless the target database is registered as a data-tier application. There is some talk of enhancing the projects to use this version number and description somewhere within the database, but right now they are pretty much only there for you to populate if you wish.

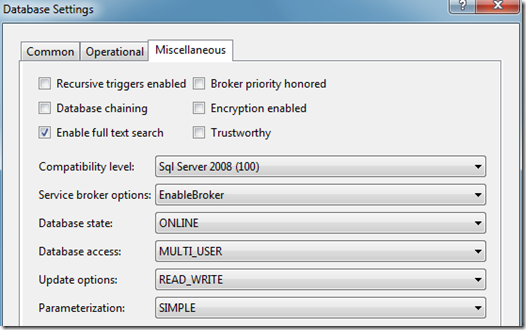

You can also set the Database Settings for your project. These options are the normal ones you would set when setting options in SQL Server. You can choose to publish these to your targets so set these to your preferences. (More on that in a future post.)

I'm going to bypass the SQLCLR and SQLCLR Build tabs as I am not familiar with them and don’t currently use either in our system.

Build

The Build tab is the place to specify which warnings to ignore, the output path for your files when you build the project, and the name of the file. You may want to specify certain warnings to ignore, especially if you use cross-database projects or any references to system databases. Those can often result in warnings about missing objects, even though they'll work perfectly fine when you publish them.

- Note that the warnings are set per configuration. If you use multiple configurations be sure to set the warnings in each one.

![clip_image006[1] clip_image006[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhfbk_oc1J3UI_jzgxwLQ9NO_pHXi381-IfYSUVierioEcDTDFw94WqXkCDpa0t7TX546Gl-Ydo1f3JDbfX_FBOplJkc5q_ebp5ihBEf5kGkprHM5ICCwD1N8xbco_VjiIQ1o1-1Q_2hfY/?imgmax=800)

SQLCMD Variables

SQLCMD variables can be used throughout the project as placeholders for values passed in at publication time. For example, you may choose to change the way your scripts run based on your Development, Customer Acceptance, or Production environments. Define these variables here and use them wherever applicable in the project by inserting them as $(DeployType). SSDT will substitute them with the proper value on publication.
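For instance, a post-deploy script could branch on the variable, roughly like this sketch. The value 'Development' and the dbo.Customer table are assumptions for illustration.

```sql
-- $(DeployType) is replaced with its text value at publish time,
-- so it is quoted here to compare it as a string.
IF '$(DeployType)' = 'Development'
BEGIN
    PRINT 'Development deployment: loading sample data.';

    -- Hypothetical table; the check keeps the insert repeatable.
    IF NOT EXISTS (SELECT 1 FROM dbo.Customer WHERE CustomerName = N'Test Customer')
        INSERT INTO dbo.Customer (CustomerName) VALUES (N'Test Customer');
END;
```

Because the substitution is plain text replacement, every branch still has to be valid T-SQL for the target database, even if it never runs in that environment.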

Build Events

Build events can be set up to run certain commands before and after the project is built. You can also choose to run the Post-Build event commands only when the project builds successfully or on every build.

Debug

Start Action and Target Connection String should make sense for those who need to change them. One item to note is that the debug action defaults to a SQL Express type instance of your database that is released to (localdb)\DBName. This will run within SSDT so you can try out your T-SQL before committing the changes in the project.

** Note that if you use FILESTREAM, you will not be able to use these user instances. You will need to change your debug database to a SQL Server install.

The Deployment Options screen has a few settings worth calling out.

"Block incremental deployment" is one to be aware of. If you run into any constraints, refactoring, data type changes, NULL to NOT NULL, or similar changes, you could have your debug action stop unexpectedly. It can be really useful to avoid dropping columns that could contain data, but if you are aware of the changes, this option could also get in the way of normal releases. According to the MS documentation, this will only block the change if there is data in the table.

"DROP Objects in target but not in project" can be useful to make sure that everyone is running with what's in the project and that they don't have leftover objects that could interfere with testing. However, it's also possible to lose a bunch of work if you publish a project with this option against your local instance containing the stored procedure you've worked on for the last 2 weeks.

"Advanced" contains a lot of different options about what to compare and how to generate the changes. Some of the more interesting options include:

- "Allow incompatible platform" - this will let you push your changes to a version of SQL Server other than the one specified in the project properties. The changes will fail if you try to do something not allowed in the target, but this is useful if your project is set to the lowest version you support.

- "Drop xyz not in source" - these options control what will be dropped from the target if it's not defined in the source. The default options are likely good for most users.

- "Ignore xyz" - defines what should be ignored when comparing the project against the target. You may want to ignore details about the partition schemes, or what permissions are set in the target.

- "Include Transactional Scripts" will set up the release script as transactions when publishing the database. This is useful when you want to make sure that if one part of the publish action fails, the change script is rolled back.

- "Verify Deployment" - stops the publish actions if the script could run into some problems.

Reference Paths

This section is used to modify and maintain the various Server & Database variables used with cross-database references. See the "External Database References" page for more detail.

Code Analysis

This option can be useful to check for common issues such as using reserved words, non-standard characters in object names, or using "select *" in your queries. You can also specify whether to show these as errors or warnings. Set appropriately for your environment.

Monday, October 29, 2012

SSDT: Importing an Existing Database

Import Database into your project by right-clicking the project name or selecting the Project Menu and selecting the Import -> Database option. Note that you can also import from SQL Scripts or an existing dacpac file. One of those may be necessary if you don't have direct access to your source server.

You'll then be asked to choose your project name, location, folder structure, and what to import. You can choose the defaults if you want. If you have an intricate permissions structure, you may want to import the permissions. If your logins, users, and permissions tend to vary by environment, you may want a different way to handle those. That will be covered in the "Permissions" section.

You'll have several options for folder structure. I'd choose the recommended structure unless you have a reason to choose something else. That will structure all of your objects under their appropriate schema, by object type. If you have a relatively simple database model, you may choose to structure your project by schema alone, object type alone, or just put everything in the root of the project. This only affects your project file structure, not your database structure.

Once you're satisfied with your settings, click the "Start" button to begin populating your project.

Importing From a Dacpac File

SSDT supports importing from a dacpac file. This can be useful if you're working on a project for a database to which you do not have direct access in order to import the schema directly. These files also can be used as external database references if you work in an environment where multiple databases interact.

In order to create a dacpac file, you have a couple of options.

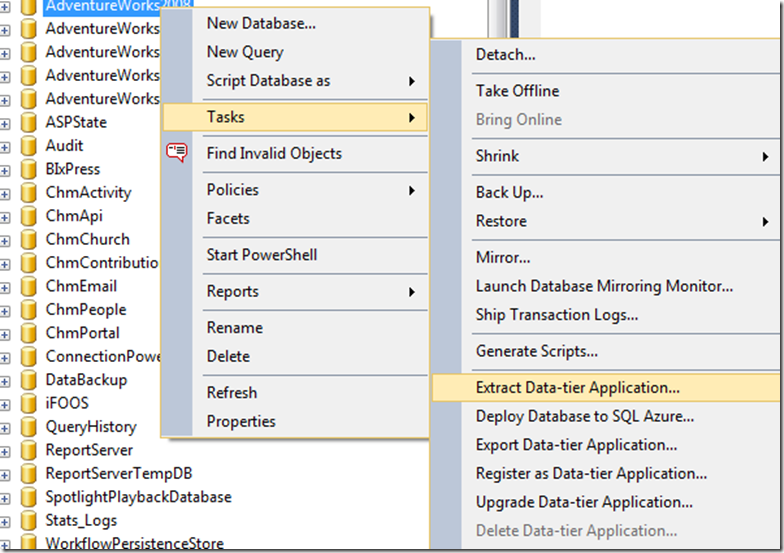

You can generate a dacpac file from a SQL Server database using SSMS 2012. Right-click the database you wish to use. Select Tasks, then "Extract Data-tier Application…".

Set the file location and any other options you want to use.

Click next. Verify the settings. Click Next again. The system will now generate a dacpac file in the location you specified.

Note that choosing the "Export Data-tier Application" will create a bacpac file. This is used to backup all schema and data in a database so it can be published to an Azure database. You cannot use a bacpac file to import schema into your project.

Generating a dacpac using SQLPackage.exe

You can always use the SQLPackage command line to extract the DB Schema into a dacpac file. For a complete list of parameters, see http://msdn.microsoft.com/en-us/library/hh550080%28v=VS.103%29.aspx.

For a quick extraction from a trusted server, you can run something like the following:

Sqlpackage.exe /a:extract /ssn:localhost /sdn:Adventureworks2008 /tf:Adventureworks2008.dacpac

This command uses an "Action" of Extract, with a SourceServerName of localhost, a SourceDatabaseName of Adventureworks2008, and a TargetFile of Adventureworks2008.dacpac.