One of the areas that SSDT doesn't address adequately is permissions. Many users have different users, logins, and permissions set up across their environments, but SSDT takes a "one size fits all" approach and assumes that you will have the exact same configuration in each environment. While that should be the case for your schema, this area requires some interesting and somewhat involved techniques to handle well in SSDT.

Attribution - I'm borrowing heavily from Jamie Thomson's (

blog |

twitter ) post

A strategy for managing security for different environments using the Database Development Tools in Visual Studio 2010

Jamie addressed this problem for the older DB Projects (Datadude, VSTSDB, DBPro, etc.). He came up with an excellent, if somewhat complex, solution to effectively handle pushing different permissions to different environments. This is my attempt to update this and distill it into a "how to" for SSDT and SQL Projects, but I freely admit that this is based on Jamie's excellent work.

In general, the steps we'll want to take are:

- Generate a set of SQL Logins that will be used on the server.

- These should be run on the servers or local box already - we will not use these within the SQL Project itself though you can if you wish.

- Generate the files for users/roles/permissions for each database project and environment.

- Add the files to your SSDT SQL Project

- Create and set a variable for your environments in your SSDT SQL Project.

- Adjust your Security Wrapper file to handle your environments.

- Adjust your post-deploy script to call the Security Wrapper.

First, let's look at what happens when you import Permissions by default into an SSDT SQL Project.

As you can see from the screenshot, we have a "Permissions" file containing granted permissions, a "RoleMemberships" file that adds users to various roles, a "Sales" file for the Sales schema, and 3 files each for the users - one for its schema, one for its login, and another for its user. Confusing, but not necessarily a problem until you need a release where User1 does not and will not exist on the server or where User2 belongs to a different domain than your production servers and can't and should not be created there because there's no trust relationship.

Let’s remove all of those files for objects other than Schemas from the project before we start. You’ll probably have to look at each file to verify before removing it from the project if you’re not sure which file is which. If you accidentally remove the files for the schemas, you’ll get errors and need to re-add a “Schema” to this security folder for each schema that is used.

Generate SQL Logins

This Microsoft KB article details the sp_help_revlogin stored procedure. Create this on your source database, run it, and use the output to re-create those logins elsewhere. This will copy all logins and users, but no permissions or database users.

Generate the Security Files through PowerShell

First, download the

PowerShell_CreatePermissions.Zip file. Extract that to a folder on your hard drive.

Note - the script assumes that you will use Windows authentication to connect to your SQL Server. The script will need to be adjusted if you need to use SQL Authentication.

Edit the "GeneratePermissions.ps1" file. Replace the "DB1", "DB2", etc with whatever database names you'll be using. Also uncomment any of the extra lines that you'll need in the file. (There is likely a better way to do this in Powershell and I'm more than open to some suggestions on how to build up this array in a more concise manner.)

The assumption is made that the databasename == the projectname. If that's not the case, adjust your DB/Project names accordingly.

Open a PowerShell prompt in that folder after editing/saving the file.

Run the file using something like this commandline:

.\GeneratePermissions.ps1 -SQLInstance "localhost" -Environment DEV

"localhost" should be replaced with your servername

DEV in this case indicates that this will be tied to a variable value of "DEV" when the project is published.

I'd recommend running this for every environment at one time if you can. It will make adding the files to the project much easier.

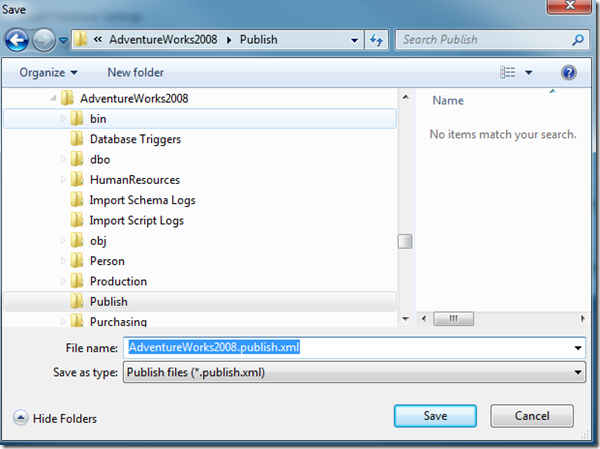

If you run for several environments, you'll see a folder structure something like the following for each project (in this case for Adventureworks2012):

Inside your "SecurityAdditions" folder, you'll see the following files and folders:

Each of these files will be used to add permissions for each environment. In this case, we have permissions set for our Development, QA, Local, Production, and Staging environments.

Now, let's add the "SecurityAdditionsWrapper.sql" file from the files we extracted earlier into your "SecurityAdditions" folder. Feel free to edit it as needed to match the environment(s) you want to use for your database project. By default, it's set to look for the above files plus a "Default" file to be a catch-all. Adjust that so all files/environments match the files you created and save it.

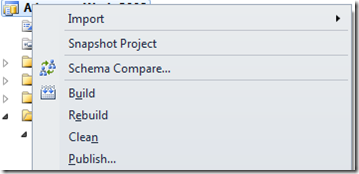

Add the files to your SSDT SQL Project

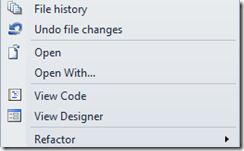

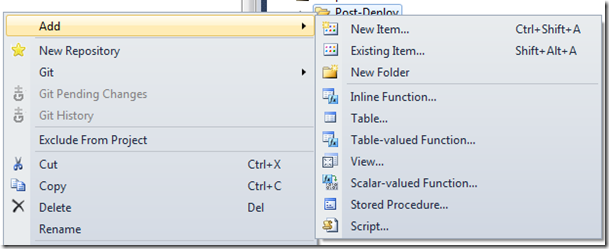

Copy your newly created folder(s) to the appropriate project. Open each project, and you will notice that the files still don't show up. Click the project file. You should see a menu item that's now enabled to "show all files".

You'll see a bunch of files that aren't part of the project under a "Scripts" folder:

We want to add them to the project. Right-click the "Scripts" folder and select the option to "Include in Project". Click the Project file again and turn off the option to show all files.

- We need to set all file properties to "Not in Build" or there will be errors when trying to build the project.

Unfortunately, we can't select just the folder to exclude from the build. We need to select the files. You can ctrl+click to select multiple files. Select all of the files, open the Properties tab, and change the option from "Build" to "None"

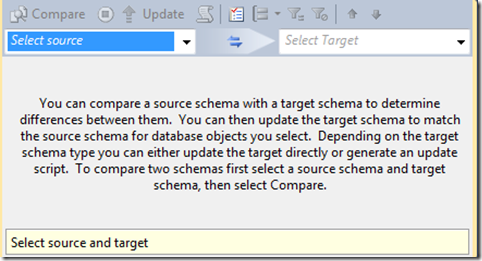

Create and set your Variable to change environments

Create and set your Variable to change environments

Open the Project Properties window

We want to change the SQLCMD variable to add a new variable called "DeployType". Set it's default value to "Local" for now, or whatever environment for which you have a set of security scripts.

Adjust your Security Wrapper files

Adjust your Security Wrapper files

The SecurityAdditionsWrapper.sql file in the code set I've created comes with settings for "Dev", "QA", "Staging", "Production", "Local", and "Default". It's likely that you will not use all of these in your setting. One drawback to using SQLCMD to wrap all these files together is that if you don't have a file or set of files, the script will start throwing errors because an expected file doesn't exist. Edit the SecurityAdditionsWrapper.sql file to remove any of the configurations you will not use or change the names to match the environment values you used earlier.

Adjust your Post-Deploy script

Finally, we need to open your Post-Deploy script.

Add the following to your script:

:r .\SecurityAdditions\SecurityAdditionsWrapper.SQL

This will include your SecurityAdditionsWrapper file as part of your post-deploy process. See my earlier post on

selectively running pre/post deploy scripts if you want finer control.